The Willful Marionette

Exploring Responses to Embodied Interaction

System Description

The Willful Marionette is an art installation developed as a collaboration between the artists (authors LoCurto and Outcault) and the Interaction Design Lab at the University of North Carolina at Charlotte. The body of one of the artists was 3D-scanned, modified to be articulated like a marionette, and then 3D-printed at approximately half scale. The marionette is controlled by motorized strings and perceives its surroundings with a pair of depth cameras. It was created to interact with participants through non-verbal channels: it senses and responds solely to bodily motion, recognizing participants’ gestures and positions. It does not mimic those it observes; instead, it behaves in a manner that is responsive but unpredictable. The marionette’s strings, materials, and exposed electronics make it clearly inhuman, but its form and movement convey a provocative degree of humanity.

My Role: I was assigned to the user evaluation study, where I defined the design principles and gesture sets for a dialogue intended to provoke an emotional response. This experience allowed me to explore evaluation principles and to discover a new set of system design principles that were not tied directly to a screen interface.

Design Goal

The Willful Marionette is intended to provoke, challenge, and confuse, and by doing so to construct novel experiences for its participants. This is a highly unusual design goal for an interface intended primarily to be useful. It is inevitable, however, that such goal-directed systems will on occasion confuse or challenge some users.

Design Methods

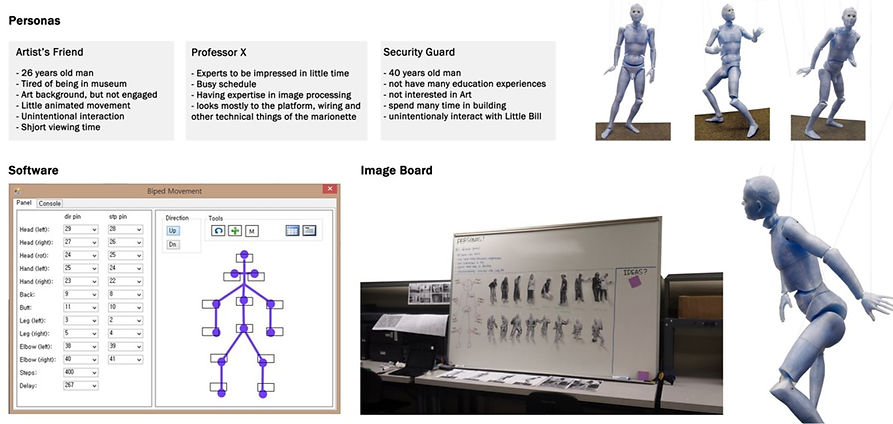

In the early stages of the project we used design methods such as bodystorming, role-playing, personas, and image boards to explore and develop ideas for the marionette. These methods helped us generate gesture ideas that could be applied to the actual interaction with Little Bill, the marionette. Bodystorming in particular helped our team explore possible gestures between users and the marionette that could create novel interactions. It also gave us the opportunity to think through the interactions ourselves and to decide not only on the interaction gestures but also on the software and technologies.

Role-playing

Gesture Elicitation Study

To gain a deeper understanding of gestural interaction with a reactive humanoid interface that seeks emotional engagement with its users, we conducted an elicitation study with 12 participants (2 female, 9 male, and 1 child).

Wizard of Oz

During the study, we controlled the marionette through a Wizard of Oz system so that it responded to participants’ gestures. For example, the marionette would raise its head and look directly at a participant’s face. When participants approached Little Bill, he raised his hands as if signaling “don’t come closer.” The marionette also performed tracking actions, following the participant’s direction. In addition, it expressed idle actions that represent human-like behaviors, such as “breathing” and “shaking his head.”
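The wizard’s responses described above amount to a small set of rules. As an illustration only, they could be written down as a rule-based behavior selector like the Python sketch below. The `Observation` fields, the priority order, the `TOO_CLOSE_M` threshold, and the behavior names are all our assumptions; the study does not report how the wizard chose between behaviors.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: the study does not report the distance at which
# the "don't come closer" gesture was triggered.
TOO_CLOSE_M = 1.0


@dataclass
class Observation:
    """What the wizard sees about the nearest participant (illustrative fields)."""
    distance_m: Optional[float]      # None when nobody is in view
    facing_marionette: bool = False  # participant looking at the marionette


def choose_behavior(obs: Observation) -> str:
    """Pick one behavior; earlier rules take priority over later ones."""
    if obs.distance_m is None:
        return "idle"                 # e.g. breathing, shaking the head
    if obs.distance_m < TOO_CLOSE_M:
        return "raise_hands"          # signal "don't come closer"
    if obs.facing_marionette:
        return "raise_head_and_look"  # return the participant's gaze
    return "track"                    # turn to follow the participant
```

Writing the rules out this way makes their priority explicit (a participant who is too close triggers the warning gesture before eye contact or tracking), which is one way observations from a Wizard of Oz session could inform an automated controller.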

Results

In our initial elicitation study, the 12 participants performed a total of 119 gestures. A key finding was that eye contact is an important way of interacting with the marionette: eye contact appears to make participants feel that they are communicating or interacting with it.

System Design

The artistic vision for the piece was to create an interactive humanlike form that provides reflection on the physicality and frailty of the human body. The resulting computer-controlled marionette is derived from a high-resolution 3D scan of the body of one of the artists. This scan was segmented into 17 pieces, and then re-connected by a set of hinge and socket joints in locations corresponding to the joints on the human body. The scan was then 3D printed in PLA plastic at a height of approximately three feet.

Thirteen strings are attached to the body, primarily at the joints, and each is connected to a stepper motor. A pair of lifelike doll’s eyes is installed in the head, with eyelids controlled by another motor. The motors are collectively controlled by an Arduino Due™ microcontroller. Two Microsoft Kinect™ sensors are mounted behind the frame that encloses the marionette, providing an approximately 180° field of view. These sensors are connected to a PC running software that interprets the gestures and positions of people nearby.
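Because each depth camera reports positions in its own coordinate frame, software that combines two sensors has to map both into one shared frame before it can, for example, turn the marionette’s head toward a participant. The Python sketch below shows that step under stated assumptions: each sensor is treated as reporting a horizontal position (x to the right, z forward, in metres), and the ±45° mounting yaw in `LEFT` and `RIGHT` is a placeholder, not the installation’s actual geometry. The names are hypothetical and this is not the Kinect SDK’s API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorPose:
    """Hypothetical mounting pose of one depth camera in the shared frame."""
    yaw_deg: float         # rotation about the vertical axis; positive turns toward +x
    offset_x: float = 0.0  # sensor position in the shared frame, metres
    offset_z: float = 0.0


def to_shared_frame(pose: SensorPose, x: float, z: float) -> tuple[float, float]:
    """Rotate and translate a point (x right, z forward) from a sensor frame."""
    t = math.radians(pose.yaw_deg)
    xs = x * math.cos(t) + z * math.sin(t) + pose.offset_x
    zs = -x * math.sin(t) + z * math.cos(t) + pose.offset_z
    return xs, zs


def bearing_deg(xs: float, zs: float) -> float:
    """Horizontal angle from the marionette's forward axis (0 = straight ahead)."""
    return math.degrees(math.atan2(xs, zs))


# Assumed layout: two sensors side by side, each splayed outward by 45 degrees.
LEFT = SensorPose(yaw_deg=-45.0)
RIGHT = SensorPose(yaw_deg=45.0)
```

Under these assumptions, a participant standing 2 m straight in front of the right-hand sensor maps to a bearing of about 45°, which a controller could use as the target angle for the tracking behavior.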

User Study

We conducted a semi-structured qualitative study in which participants interacted freely with the art piece and were then interviewed about their experience. Twenty-four participants consented both to interacting with the marionette and to the post-interaction interview. The interview consisted of several questions about their initial experiences, reflections, and characterizations of the marionette, including one that asked them to rate how engaging it was. Twenty participants interacted with the marionette in pairs; the remaining four did so alone.

Evaluation

There are three components to our analysis of this data: 1) participant ratings of how engaging the piece was, 2) a quantitative analysis of word frequencies, and 3) a coding analysis of themes and concepts discussed by participants.

Participant engagement

Participants were asked to rate their level of engagement with the marionette. The mean engagement score was 7.875 and the median 7.75.

Word Frequency Analysis

We extracted the words spoken by each participant from the transcripts, removed common English stop-words and used the WordNet lemmatizer to obtain root words.
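The preprocessing described above can be sketched in Python as follows. This is an illustration, not the study’s code: the tokenizer regex and the short `STOP_WORDS` set are assumptions, and lemmatization is passed in as a function so that any lemmatizer can be plugged in.

```python
import re
from collections import Counter
from typing import Callable, Iterable

# A tiny illustrative stop-word list; a standard English list is much longer.
STOP_WORDS = frozenset(
    {"a", "an", "and", "the", "it", "is", "was", "i", "to", "of", "like", "me"}
)


def word_frequencies(
    utterances: Iterable[str],
    lemmatize: Callable[[str], str] = lambda w: w,
) -> Counter:
    """Tokenize each utterance, drop stop-words, lemmatize, and count words."""
    counts: Counter = Counter()
    for text in utterances:
        # Lower-case and keep runs of letters (and apostrophes) as tokens.
        for token in re.findall(r"[a-z']+", text.lower()):
            if token not in STOP_WORDS:
                counts[lemmatize(token)] += 1
    return counts
```

With NLTK installed and its `wordnet` and `stopwords` data downloaded, `WordNetLemmatizer().lemmatize` (from `nltk.stem`) could be passed as `lemmatize`, and `stopwords.words("english")` (from `nltk.corpus`) could replace the toy stop-word set. Note that `WordNetLemmatizer.lemmatize` treats words as nouns unless a part-of-speech argument is given.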

A word cloud of the participants’ interview responses. Word size is proportional to frequency of use. Blue and red words were used significantly more frequently by high and low self-reported engagement participants, respectively. White words were used equally by both groups.
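The caption does not state which statistical test determined that a word was used “significantly more frequently” by one group. One common choice, shown here purely as an assumption, is a per-word 2×2 Pearson chi-square test that compares how often a word occurs, versus all other words, in the high- and low-engagement groups. The `color_words` helper and its alpha = 0.05 cutoff are hypothetical.

```python
import math
from collections import Counter


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) and p-value, df = 1.

    Table layout: [[a, b], [c, d]] = [[word in high, other words in high],
                                      [word in low,  other words in low]].
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0, 1.0  # degenerate table: no evidence of a difference
    chi2 = n * (a * d - b * c) ** 2 / denom
    p = math.erfc(math.sqrt(chi2 / 2))  # survival function of chi-square, 1 df
    return chi2, p


def color_words(high: Counter, low: Counter, alpha: float = 0.05) -> dict[str, str]:
    """Label words blue (high group), red (low group), or white (no difference)."""
    n_high, n_low = sum(high.values()), sum(low.values())
    colors = {}
    for word in set(high) | set(low):
        a, c = high[word], low[word]
        _, p = chi_square_2x2(a, n_high - a, c, n_low - c)
        if p >= alpha:
            colors[word] = "white"
        elif a / n_high > c / n_low:  # compare relative frequencies
            colors[word] = "blue"
        else:
            colors[word] = "red"
    return colors
```

With small counts the chi-square approximation is unreliable, and testing every word raises multiple-comparison concerns; Fisher’s exact test or a corrected threshold would be reasonable alternatives.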

Thematic Analysis

The intent of our qualitative analysis was to determine what broad categories describe the participants’ experiences with the marionette. To determine this we performed phrase-level coding of the transcribed interview data.
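Once phrases have been coded, the broad categories can be summarized by rolling codes up into themes and counting both the coded phrases and the distinct participants per theme. The Python sketch below assumes a codebook that maps each code to exactly one theme; the function name and data layout are hypothetical, and the study does not describe its coding tooling.

```python
from collections import defaultdict
from typing import Iterable


def summarize_themes(
    coded_phrases: Iterable[tuple[str, str]],
    codebook: dict[str, str],
) -> dict[str, dict[str, int]]:
    """Roll phrase-level codes up into themes.

    coded_phrases: (participant_id, code) pairs, one per coded phrase.
    codebook: maps each code to the broad theme it belongs to.
    Returns, per theme, the number of phrases and of distinct participants.
    """
    phrases: dict[str, int] = defaultdict(int)
    people: dict[str, set[str]] = defaultdict(set)
    for participant, code in coded_phrases:
        theme = codebook[code]  # KeyError flags a code missing from the codebook
        phrases[theme] += 1
        people[theme].add(participant)
    return {t: {"phrases": phrases[t], "participants": len(people[t])} for t in phrases}
```

Counting distinct participants alongside phrase counts keeps one talkative participant from making a theme look more widespread than it is.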